
For the very first time, scientists have proven that artificial intelligence can deceive systems designed to detect deepfakes.

A portmanteau of “deep learning” and “fake”, deepfakes are videos designed to fool the viewer by merging real footage with artificially generated images.

While some are made for laughs, such as clips that manipulate a politician’s mouth so it looks as though they’re saying something absurd, the technology is advancing rapidly and experts are concerned it will make damaging disinformation even more convincing.

Systems have been designed to detect when footage is a deepfake, but those systems can be fooled, researchers from the University of California San Diego revealed at a conference.

(Image: Getty Images)

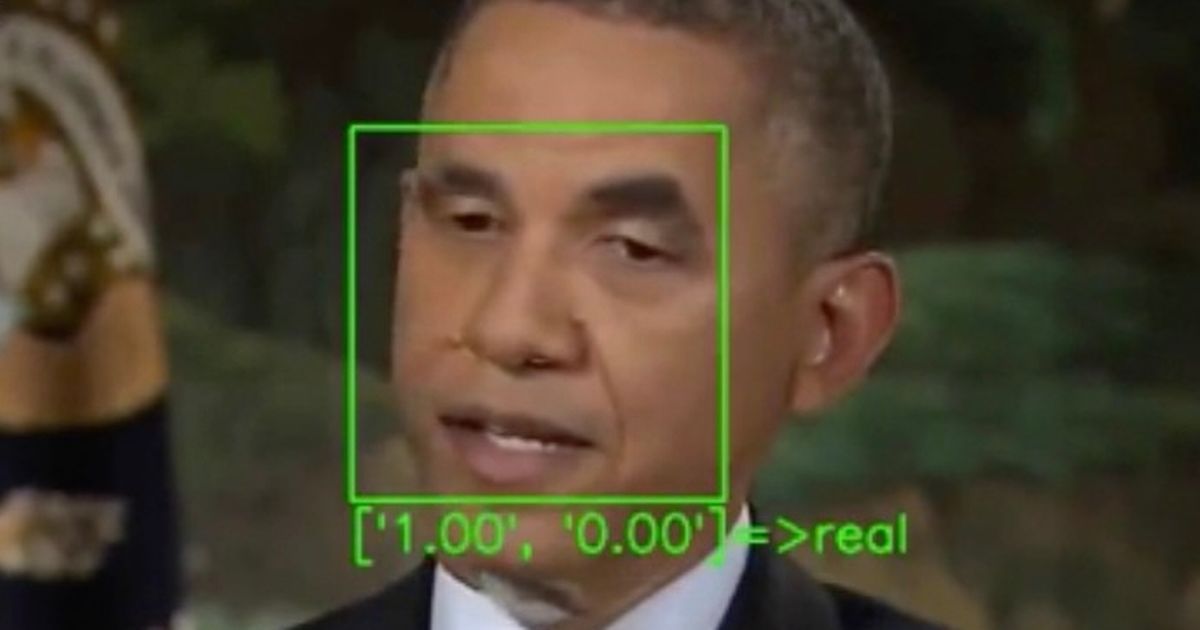

Typical deepfake detectors focus on the faces in the videos they’re analysing, tracking them and passing the cropped face data on to a neural network that determines whether each face is real or not.

Detectors will often focus on unnatural blinking as deepfakes don’t tend to replicate the eye movement convincingly.

Speaking at the Winter Conference on Applications of Computer Vision (WACV), held online in January, scientists demonstrated how detectors can be tricked by inserting inputs called “adversarial examples” into every frame of a deepfake video.


These examples are slightly manipulated inputs that cause AI systems, such as machine learning models, to make a mistake — and they work even after videos are compressed.

The most advanced deepfake detectors rely on these machine learning models to function, so the news is troubling to say the least.
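To make the idea of an adversarial example concrete, here is a minimal sketch in Python. It is not the researchers’ code: the four-pixel “frame”, the toy logistic `fake_probability` detector and its weights are all hypothetical, and the perturbation uses a standard sign-of-gradient step (often called FGSM) chosen purely for illustration. It also assumes the attacker knows the detector’s weights.

```python
import math

def fake_probability(pixels, weights, bias):
    """Toy stand-in for a detector: logistic model returning P(fake)."""
    z = sum(w * x for w, x in zip(weights, pixels)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def sign(value):
    """Return -1, 0 or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)

def perturb(pixels, weights, epsilon):
    """Move every pixel by at most epsilon in the direction that lowers P(fake).

    For this logistic model the gradient of the score with respect to
    pixel i has the same sign as weights[i], so we step against it.
    Pixels are clipped to the valid [0, 1] range.
    """
    return [
        min(1.0, max(0.0, x - epsilon * sign(w)))
        for x, w in zip(pixels, weights)
    ]

# Hypothetical detector parameters and a hypothetical deepfake frame.
weights = [2.0, -1.5, 3.0, -2.5]
bias = -0.5
frame = [0.6, 0.3, 0.5, 0.2]

adversarial = perturb(frame, weights, epsilon=0.2)

print("before:", round(fake_probability(frame, weights, bias), 3))
print("after: ", round(fake_probability(adversarial, weights, bias), 3))
```

In this toy case the original frame scores above 0.5 (flagged as fake) and the perturbed frame scores below it, even though no pixel moved by more than 0.2. Real detectors take far more pixels as input, so much smaller changes per pixel can add up to the same effect.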

“Our work shows that attacks on deepfake detectors could be a real-world threat,” computer engineering Ph.D. student Shehzeen Hussain told the conference.


“More alarmingly, we demonstrate that it’s possible to craft robust adversarial deepfakes even when an adversary may not be aware of the inner workings of the machine learning model used by the detector.”

Computer science student Paarth Neekhara added: “If the attackers have some knowledge of the detection system, they can design inputs to target the blind spots of the detector and bypass it.”

The researchers created an adversarial example for every face in a video frame, built to withstand the compression and resizing that would usually remove such manipulations.

The modified version of the face is then inserted into every frame.


The study authors tested their deepfake attacks in two different scenarios. In one, attackers had full access to the detector model, including the face extraction pipeline and the architecture and parameters of the classification model.

In the other, the attackers could only query the machine learning model to work out the probabilities of a frame being classified as real or fake.
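The query-only scenario can be illustrated with another small Python sketch. The article does not say how the researchers turned queries into an attack, so the approach here, estimating the gradient by finite differences, is an assumption made for illustration. The `make_black_box` detector, its weights and the frame values are hypothetical; the attack code only ever calls `query` and never reads the weights.

```python
import math

def make_black_box(weights, bias):
    """Build a hypothetical detector that exposes only a query function."""
    def query(pixels):
        z = sum(w * x for w, x in zip(weights, pixels)) + bias
        return 1.0 / (1.0 + math.exp(-z))  # P(fake)
    return query

def sign(value):
    return (value > 0) - (value < 0)

def estimate_gradient(query, pixels, delta=1e-3):
    """Approximate d P(fake) / d pixel using two queries per pixel."""
    gradient = []
    for i in range(len(pixels)):
        up, down = list(pixels), list(pixels)
        up[i] += delta
        down[i] -= delta
        gradient.append((query(up) - query(down)) / (2 * delta))
    return gradient

def black_box_attack(query, pixels, epsilon, step, max_iters=50):
    """Nudge pixels until the detector says 'real', staying within epsilon."""
    original, current = list(pixels), list(pixels)
    for _ in range(max_iters):
        if query(current) < 0.5:
            break  # detector now classifies the frame as real
        gradient = estimate_gradient(query, current)
        for i, g in enumerate(gradient):
            moved = current[i] - step * sign(g)
            # Stay within epsilon of the original pixel and inside [0, 1].
            moved = max(original[i] - epsilon, min(original[i] + epsilon, moved))
            current[i] = max(0.0, min(1.0, moved))
    return current

query = make_black_box(weights=[2.0, -1.5, 3.0, -2.5], bias=-0.5)
frame = [0.6, 0.3, 0.5, 0.2]
adversarial = black_box_attack(query, frame, epsilon=0.2, step=0.05)

print("before:", round(query(frame), 3))
print("after: ", round(query(adversarial), 3))
```

The trade-off is cost: each gradient estimate needs two queries per pixel, so an attacker without access to the model pays in the number of queries rather than in knowledge of its internals.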

The attack’s success rate in the first scenario was 99% for uncompressed videos and 84.96% for compressed videos.

In the second scenario, the success rate was 86.43% for uncompressed and 78.33% for compressed videos.


It’s the first work that demonstrates successful attacks on state-of-the-art deepfake detectors.

“To use these deepfake detectors in practice, we argue that it is essential to evaluate them against an adaptive adversary who is aware of these defenses and is intentionally trying to foil these defenses,” the researchers said.

“We show that the current state of the art methods for deepfake detection can be easily bypassed if the adversary has complete or even partial knowledge of the detector.”
